Robotic Imaging: Seeing in New Ways

We have multiple openings in robotic imaging. Our team combines optics, algorithms, and machine learning to create new and better ways for robots to see.

Our students push the limits of perception in robotics. Excellent candidates will qualify for full tuition, stipend, and top-up scholarships.

- Machine learning for camera design and interpretation

- Novel cameras: computational imaging devices spanning single-photon sensors, nano-optics, solid-state LiDAR, femtosecond imaging, event cameras, multispectral, light field imaging…

- Vision for small and fast robots: flying, driving, fast manipulation

- Long-range imaging in participating media: underwater, fog, rain

- Active and interactive imaging and control

- Streaming / live virtual reality and telepresence, augmented reality for the vision impaired

- Light field video processing: using 4D cameras to see where others cannot

- Autonomous driving, underwater survey, drone delivery, robotic surgery

About the lab: https://roboticimaging.org

Contact: donald.dansereau@sydney.edu.au

Control of Walking Robots

As robots move out of controlled environments like factories and into the wider world, many creative methods of locomotion are being explored. In particular, legged robots are suitable for traversing terrain too rough or irregular for wheels. The dynamics of legged locomotion presents many exciting challenges for planning and control: it is nonlinear, uncertain, high-dimensional, non-smooth, and underactuated.

Candidates will investigate one or more of:

- Integrated perception and motion planning over uneven terrain

- Optimization and learning paradigms for provably-robust control policies

- Experimental investigations with the ACFR’s Agility Robotics Cassie robot (pictured)

Contact: ian.manchester@sydney.edu.au

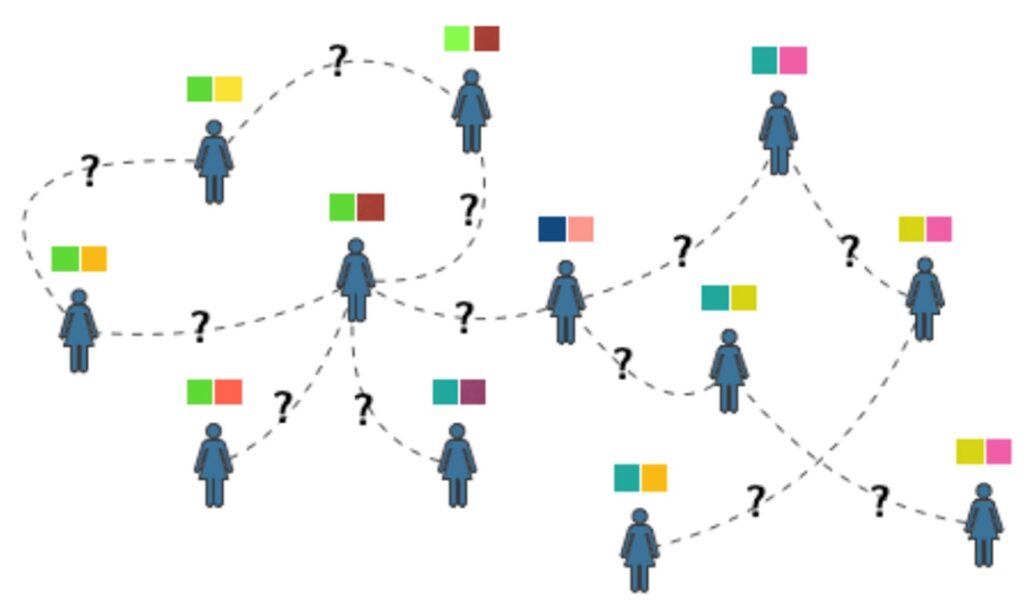

Safeguarding Our Online Social Networks

Online social networks have fundamentally changed the way our society is organized. However, misinformation and disinformation in the form of fake customer reviews, manipulated news, and disingenuous recommendations pose systemic risks to the well-being of members of society in the short term and to the values our society holds in the long term. More importantly, because we live in an interconnected network, social interactions between peers may accelerate the spread of misinformation and amplify the harm from adversarial actions. Recently, in collaboration with researchers from the University of Texas at Austin, the University of Oxford, and the Chinese University of Hong Kong, we uncovered the hidden risks of our social interactions being identified from public records on social networks (https://dx.doi.org/10.2139/ssrn.3875878). This project aims to develop algorithms and optimization frameworks that slow the spread of misinformation and safeguard our social networks, using tools from control theory, optimization, and machine learning.

Contact: guodong.shi@sydney.edu.au

LiDAR Pointcloud Perception and Deep Learning in Forests

The ACFR is currently engaged in several national and international collaborations with research, industry and government partners in sensing and robotic applications in commercial forestry, forest health, ecology and management. Forests are structurally diverse environments that pose unique challenges for robotic sensing and perception. This research will aim to develop new methods for sensing, perception and navigation using LiDAR, photogrammetry and hyperspectral imaging in forests.

Candidates will investigate one or more of the following topics:

- New developments in deep learning models for 3D pointcloud data

- Human-computer interaction for 3D deep learning using virtual reality

- Applications of 3D robotic perception and learning in forest environments

Contact: mitch.bryson@sydney.edu.au

Sensing and Mapping the Dynamic World

Dynamic scenes challenge a number of mature research areas in computer vision and robotics, including simultaneous localization and mapping (SLAM), 3D reconstruction, and multiple object tracking. Most existing solutions to these problems rely on assumptions about the static nature of either the environment or the sensing modality. This drastically reduces the amount of information that can be obtained in complex environments cluttered with moving objects. To achieve safe autonomy, obstacle avoidance and path planning techniques require this information to be integrated.

Candidates will investigate one or more of the following topics:

- Robust segmentation and tracking of moving objects observed by a moving sensor (camera, laser)

- Simultaneous localization and mapping of dynamic environments

- Novel representations of dynamic scenes directly connected with the requirements of autonomous vehicles

Contact: viorela.ila@sydney.edu.au

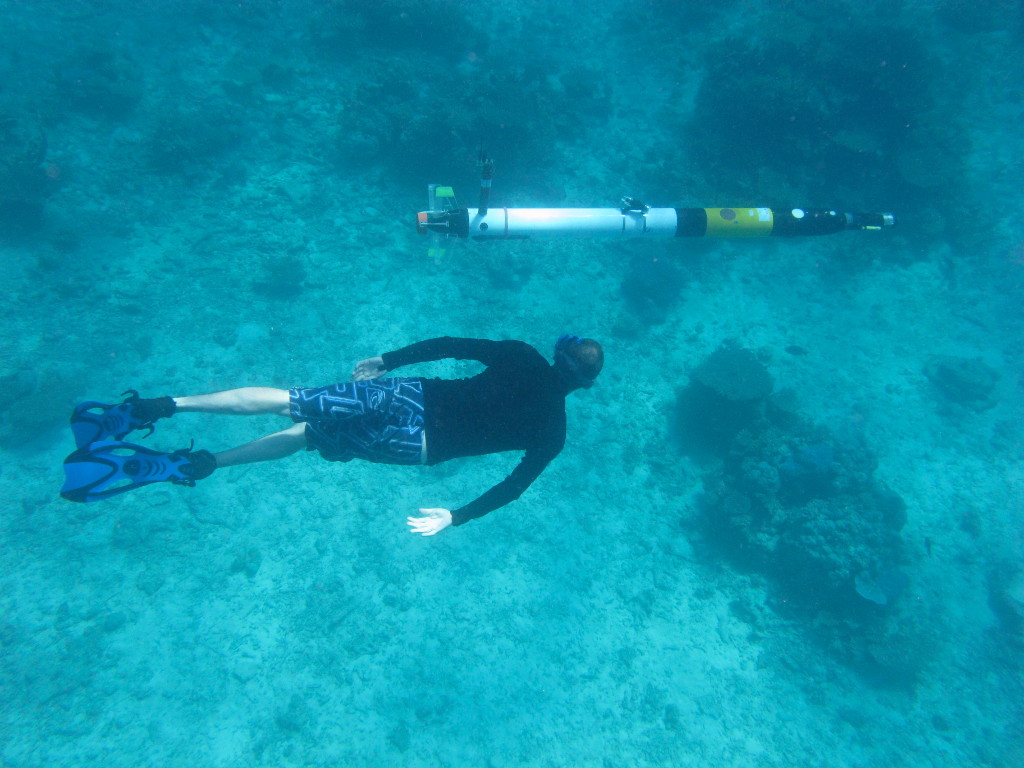

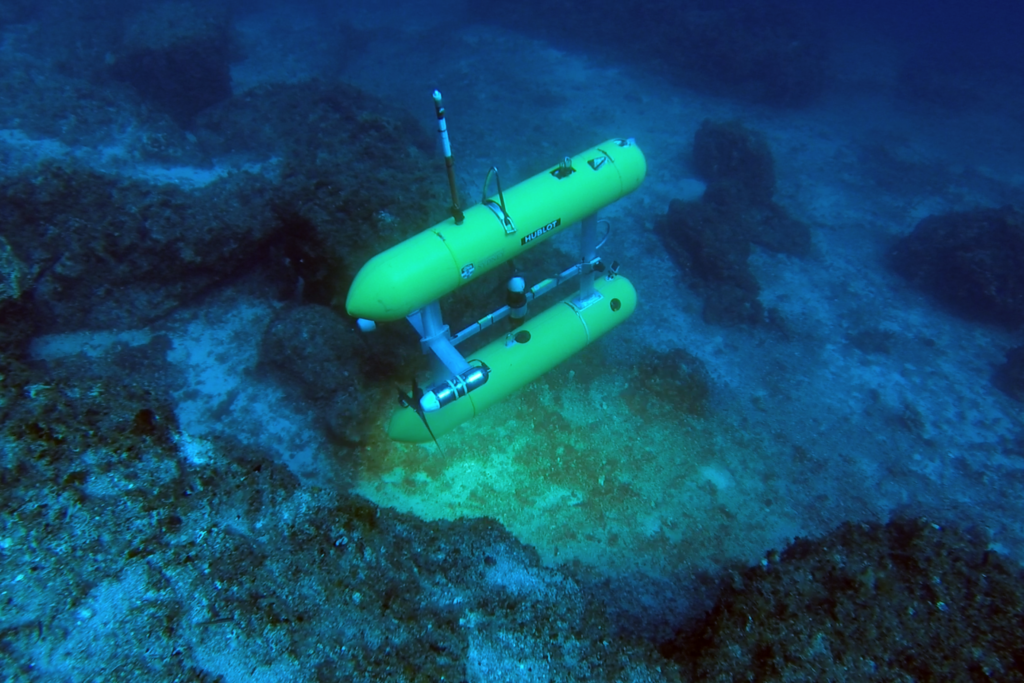

Marine Robotics

We undertake fundamental and applied research in a variety of areas related to the development and deployment of marine autonomous systems. The ACFR, as operator of a major national Autonomous Underwater Vehicle (AUV) Facility, conducts AUV-based surveys at sites around Australia and overseas. These AUV surveys are designed to collect high-resolution stereo imagery and oceanographic data to support studies in the fields of engineering science, ecology, biology, geoscience, archaeology and industrial applications.

One of the major challenges with this program is managing, searching through and visualizing the resulting data streams. Our recent research has focused on generating high-fidelity, three-dimensional models of the seafloor; precisely matching survey locations across years to allow scientists to understand variability in these environments; and identifying patterns in the data that facilitate automated classification of the resulting image sets.

Providing precise navigation and high-resolution imagery lends itself to novel methods for data discovery and visualization. As a result, we have a strong focus on methods for interacting with and discovering patterns in the data using machine learning techniques. We also have a strong record of engagement with end users in a variety of domains interested in understanding marine environments.

Candidates will investigate one or more of the following topics:

- Platforms and sensing: autonomous marine vehicles, high-resolution stereo imaging, hyperspectral and light field imaging

- Navigation and mapping: SLAM using both visual and acoustic data, visualization

- Planning and control: information-based planning and low-level control, in particular for manipulation and intervention

- Data analytics: automated processing of large volumes of data, automatic registration of multi-year datasets, identification of direct change as well as distributions of organisms

Contact: stefan.williams@sydney.edu.au

Intelligent Transportation Systems

Driving the development of future mobility

Intelligent transportation systems (ITS) research is vital for improving future mobility around Australia. By investigating and developing new technologies, ITS can help make our roads and transport networks safer, more efficient and more sustainable.

Research in ITS now will pay dividends for generations to come. It is essential for keeping Australia moving forward into the future – literally!

Our research spans various autonomous vehicle technologies, including:

- Sensor fusion for perception and tracking

- Autonomous vehicle navigation and control

- Pedestrian intention prediction

- Connected autonomy, cooperative perception and planning

- Designing the interactions between vehicles and pedestrians

Contact: stewart.worrall@sydney.edu.au

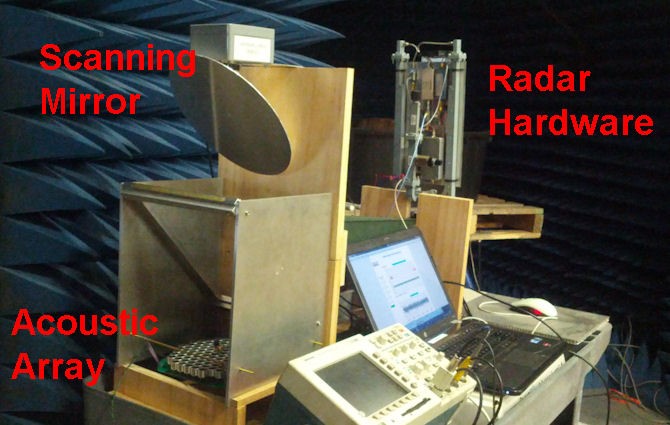

Radar Acoustic Systems

This project aims to quantify small-scale air turbulence using Doppler returns from a Radar Acoustic Sounding System (RASS) at standoff ranges. The newly discovered ability of strobed Schlieren to provide images of ultrasonic sound fields is significant as it will be exploited to visualise the changes in this field structure when passing through turbulent air. These changes will then be correlated with the simultaneously measured micro-Doppler returns from the RASS. Once this relationship is understood, we can dispense with the Schlieren setup, and rely on the RASS to provide a measure of the air turbulence.

Contact: graham.brooker@sydney.edu.au