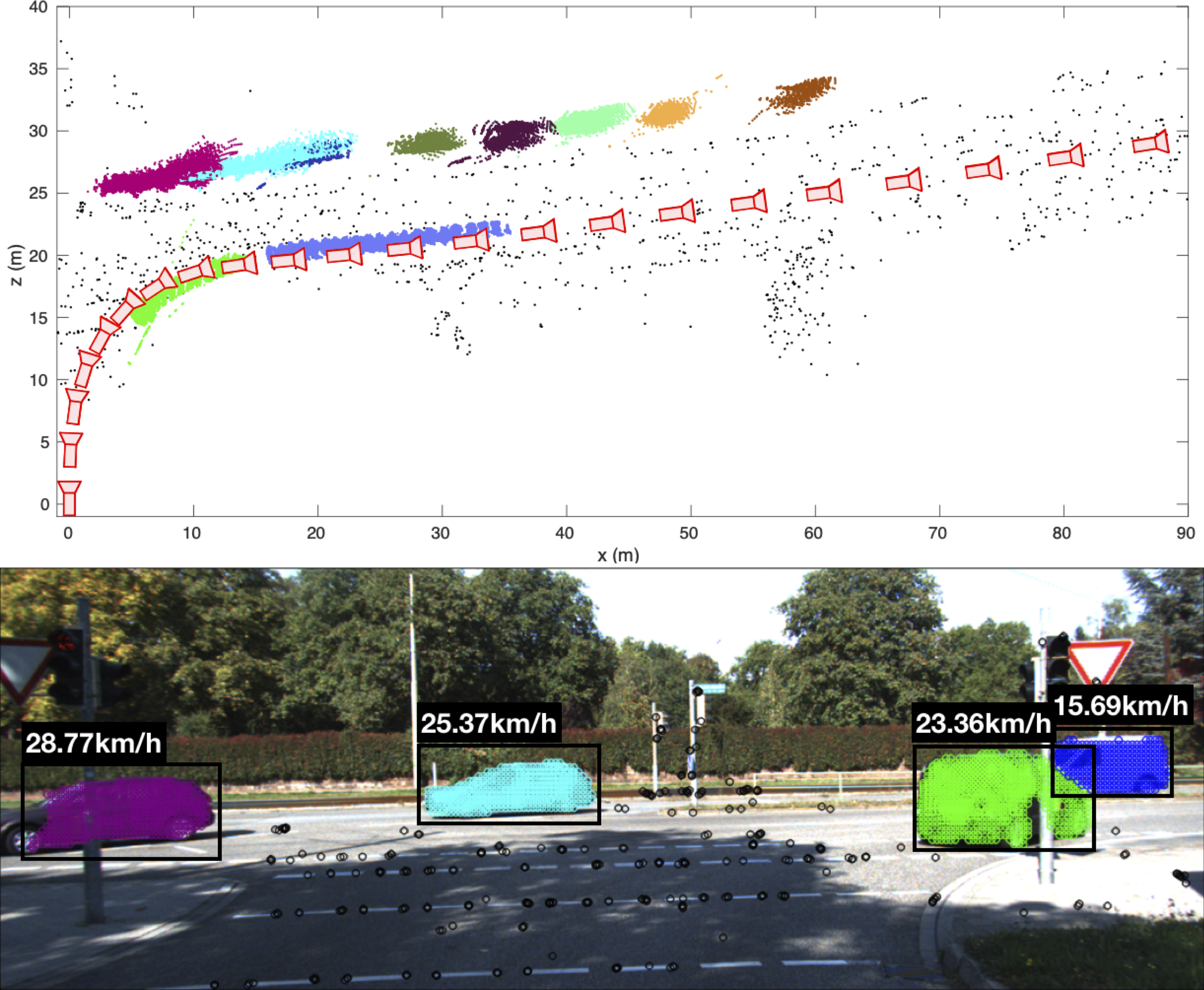

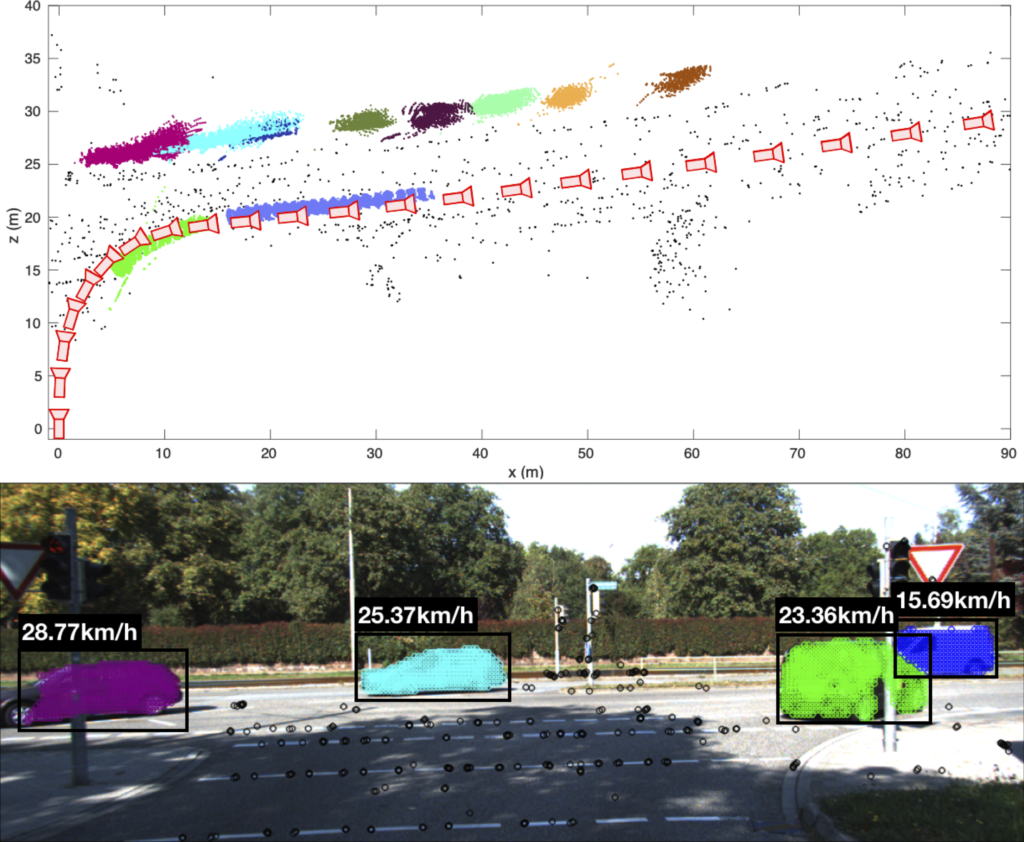

To achieve autonomy, a robot must be able to simultaneously build an accurate representation of the world, often called a map, and localise itself and other objects within it. These maps are created by fusing multiple sensor measurements of the environment into a consistent representation using estimation techniques such as Simultaneous Localisation and Mapping (SLAM) or Structure from Motion (SfM). These techniques have already revolutionised a wide range of applications, including intelligent transportation, surveillance, inspection, entertainment and film production, and the exploration and monitoring of natural environments. Unfortunately, existing solutions to these problems rely heavily on the assumption that most of the environment is static. As a result, they tend to fail or perform poorly in complex, dynamic, real-world scenarios where many objects are moving. The Robotic Perception group at the University of Sydney is researching robust solutions to sensing, estimation, mapping and planning in dynamic environments.