The video for this talk is now available: https://www.youtube.com/watch?v=x5LPiUQHa6Q

When: Thursday 12th of November, 5 pm AEST

Where: This seminar will be presented online. RSVP here.

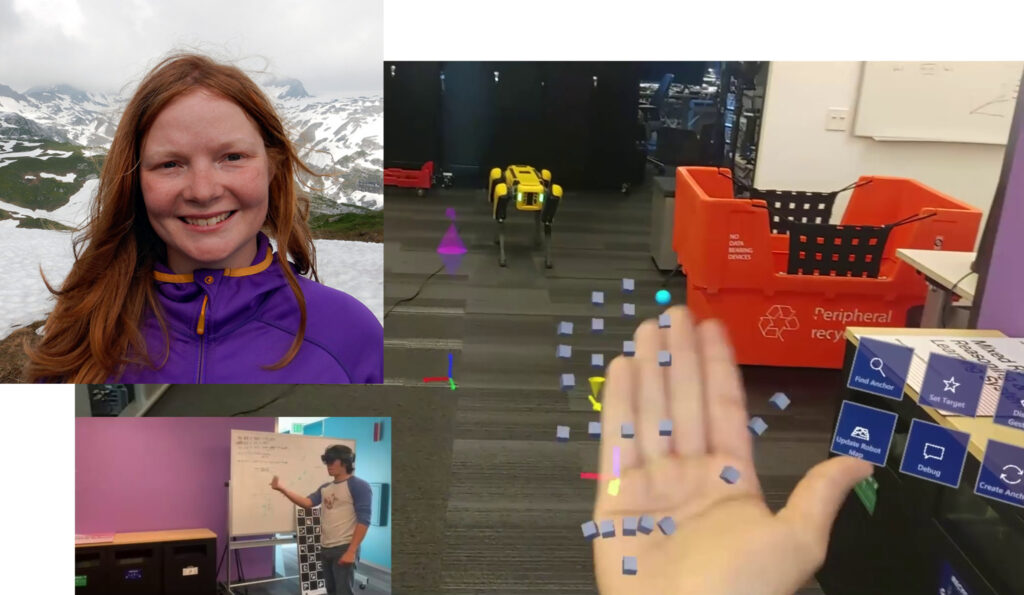

Speaker: Helen Oleynikova, Microsoft Mixed Reality and AI Lab, Zürich

Title: Mixed Reality, Volumetric Mapping, and Robotics: Sharing Spaces with Robots

Abstract: How do humans perceive the world? How should robots? Can we have some kind of shared spatial representation that both benefits people and gives robots insight into the geometry and semantics of a scene?

This talk will focus on two aspects of answering those questions: (1) recent research on Signed Distance Field-based volumetric map representations, and how they can be used for path planning and localization, and (2) how Mixed Reality can bridge the gap between robots and humans, allowing humans to see robot intentions and interact in more natural ways, but also allowing robots and humans to share the same spatial information. For both parts, we will show experiments and results from real robots, and discuss how we see the future of robotics in these two overlapping spaces.

Bio: Helen Oleynikova is a Senior Scientist on the Mixed Reality Robotics team at the Microsoft Mixed Reality and AI Lab in Zürich, Switzerland. She recently finished a PhD at the Autonomous Systems Lab at ETH Zürich with Prof. Roland Siegwart, where her thesis was on “Mapping and Planning for Safe Collision Avoidance On-board Micro-Aerial Vehicles”. She has worked on many robotic platforms during her time in the field, including robotic boats, drones, and a variety of ground robots. She is passionate about the intersection of perception and action, and about making real robots just a little bit more intelligent.