Check out our latest work! ACFR researchers will be presenting the following papers and presentations at the flagship robotics conference, the IEEE International Conference on Robotics and Automation (ICRA 2024), to be held in Yokohama, Japan, from 13-17 May:

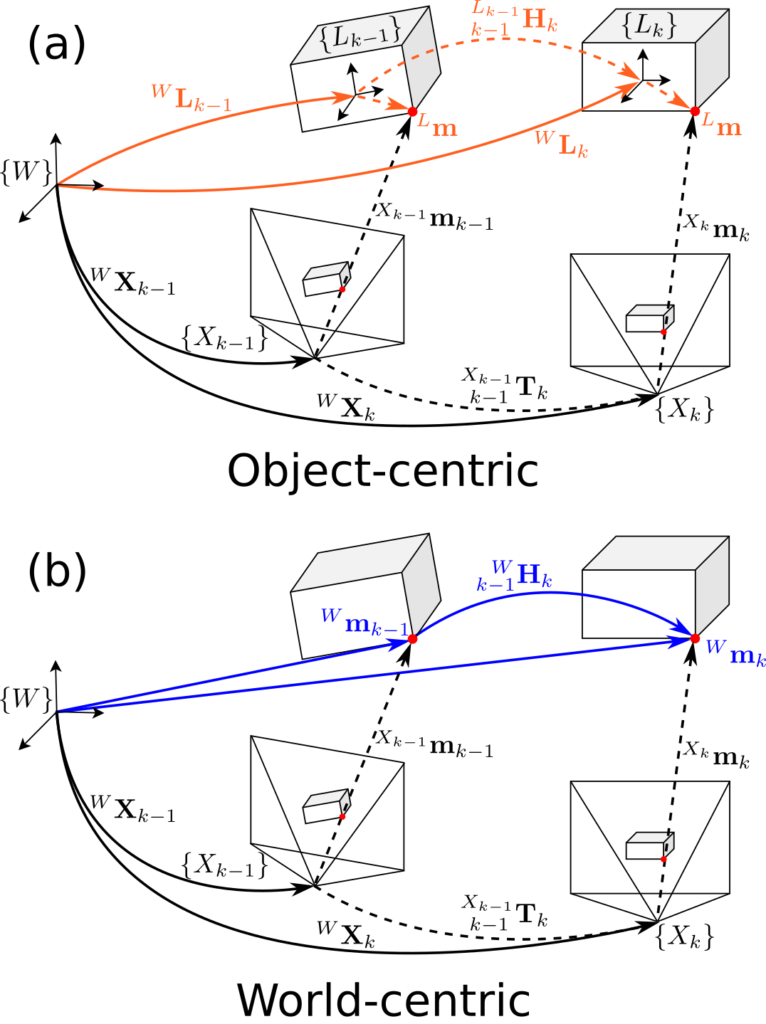

The Importance of Coordinate Frames in Dynamic SLAM

Jesse Morris, Yiduo Wang and Viorela Ila

Most simultaneous localisation and mapping (SLAM) systems have traditionally assumed a static world, which does not reflect many real-world scenarios. To enable robots to navigate and plan safely in dynamic environments, it is essential to use representations capable of handling moving objects. Dynamic SLAM is an emerging area of SLAM research because it improves overall system accuracy while also estimating object motions. The state-of-the-art literature describes two main formulations for Dynamic SLAM, which represent dynamic object points in either the world or the object coordinate frame. While expressing object points in their local reference frame may seem intuitive, it does not necessarily lead to the most accurate and robust solutions. This paper presents a thorough analysis of various Dynamic SLAM formulations and identifies the best approach to the problem. To this end, we introduce a front-end-agnostic framework using GTSAM [1] that can be used to evaluate various Dynamic SLAM formulations.

Preprint: https://arxiv.org/abs/2312.04031
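The relationship between the two formulations can be illustrated with homogeneous transforms. The sketch below uses NumPy and our own illustrative notation (an object pose `L_k` mapping object-frame points into the world, and a world-frame object motion `H`), which is not necessarily the paper's; the poses and the helper `se3` are hypothetical. It only shows how the two representations relate for a single point, not the GTSAM factor graphs the paper actually evaluates.

```python
import numpy as np

def se3(yaw, t):
    """Hypothetical helper: 4x4 homogeneous transform from a yaw angle (rad) and a translation."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = t
    return T

# Object-frame formulation: the point is stored once in the object's local frame,
# and an object pose L_k (world <- object) is estimated at every frame k.
m_L = np.array([0.5, 0.2, 0.0, 1.0])    # homogeneous point on the object
L_0 = se3(0.0, [2.0, 0.0, 0.0])          # made-up object pose at frame 0
L_1 = se3(0.1, [2.5, 0.1, 0.0])          # made-up object pose at frame 1

m_W0 = L_0 @ m_L                         # the same point in the world frame at frame 0
m_W1 = L_1 @ m_L                         # ... and at frame 1

# World-frame formulation: points are kept in the world frame and linked by a
# world-frame object motion H, so that m_W1 = H @ m_W0.
H = L_1 @ np.linalg.inv(L_0)
print(np.allclose(H @ m_W0, m_W1))       # True
```

Both parameterisations describe the same geometry; the paper's point is that they behave differently once they become variables in an optimisation.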

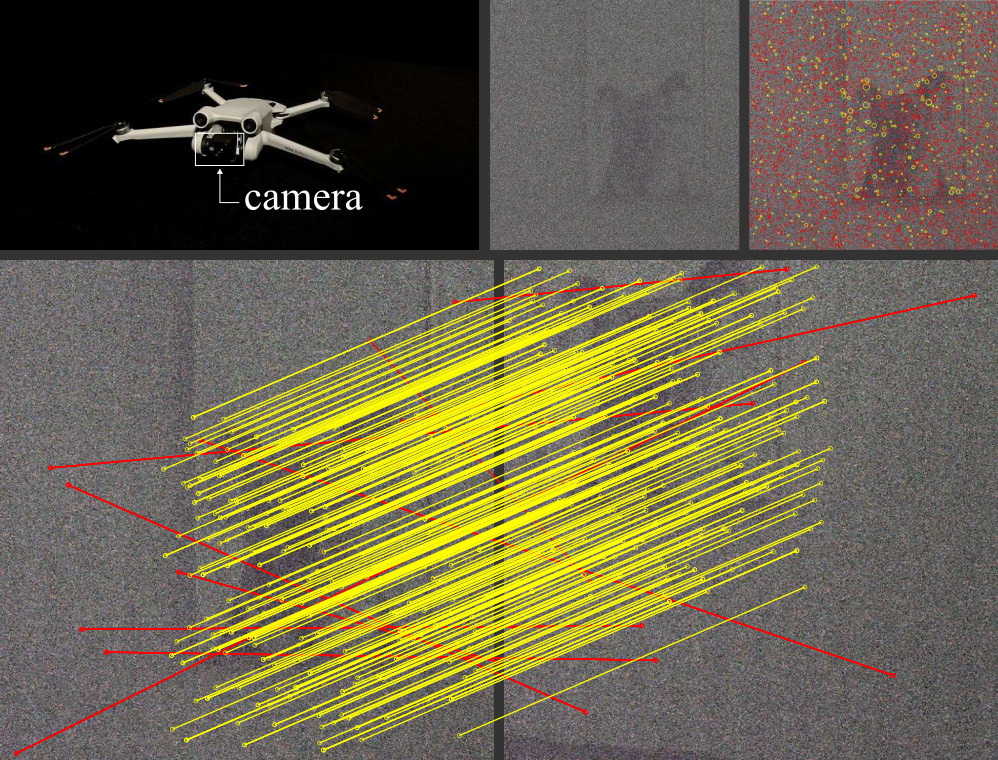

BuFF: Burst feature finder for light-constrained 3D reconstruction

A. Ravendran, M. Bryson and D. G. Dansereau

Robots operating at night with conventional vision cameras face significant reconstruction challenges because their images are noise-limited. Previous work has demonstrated that burst-imaging techniques can partially overcome this issue. In this paper, we develop a novel feature detector that operates directly on image bursts and enhances vision-based reconstruction under extremely low-light conditions. Our approach finds keypoints with well-defined scale and apparent motion within each burst by jointly searching in a multi-scale and multi-motion space. Because we describe these features at a stage where the images have a higher signal-to-noise ratio, the detected features are more accurate than those of state-of-the-art detectors applied to conventional noisy images and burst-merged images, and they exhibit high precision, recall, and matching performance. We show improved feature performance and camera pose estimates, and demonstrate improved structure-from-motion performance using our feature detector in challenging light-constrained scenes. Our feature finder represents a significant step towards robots operating in low-light scenarios and applications including night-time operations.
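The multi-motion part of the search can be illustrated in isolation. The toy sketch below (NumPy; the functions `merge_along_motion` and `best_motion` are hypothetical) merges a synthetic burst along several hypothesized per-frame motions and keeps the hypothesis with the strongest response at one pixel. It ignores the scale dimension and uses raw intensity instead of a real detector response, so it demonstrates the principle only, not BuFF's actual detector.

```python
import numpy as np

def merge_along_motion(burst, dx, dy):
    """Average the N frames of a burst after undoing a hypothesized constant
    per-frame shift of (dx, dy) pixels, aligning every frame to frame 0."""
    aligned = [np.roll(frame, shift=(-k * dy, -k * dx), axis=(0, 1))
               for k, frame in enumerate(burst)]
    return np.mean(aligned, axis=0)

def best_motion(burst, motions, y, x):
    """Return the hypothesized motion whose merged image is brightest at (y, x)."""
    scores = [merge_along_motion(burst, dx, dy)[y, x] for dx, dy in motions]
    return motions[int(np.argmax(scores))]

# Synthetic burst: a point drifting +1 px/frame in x, with additive Gaussian noise.
rng = np.random.default_rng(0)
n, h, w = 8, 32, 32
burst = rng.normal(0.0, 0.2, size=(n, h, w))
for k in range(n):
    burst[k, 16, 10 + k] += 1.0

motions = [(dx, dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1)]
best = best_motion(burst, motions, 16, 10)  # the true motion (1, 0) should score highest
print(best)
```

Averaging N correctly aligned frames shrinks zero-mean noise by roughly a factor of √N while the signal adds coherently; a wrong motion hypothesis smears the signal instead, which is why the correct motion stands out.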

Automation and Artificial Intelligence Technology in Surface Mining: State of the Art, Challenges and Opportunities

Raymond Leung, Andrew Hill and Arman Melkumyan

This survey article provides a synopsis of some of the engineering problems, technological innovations, robotic development, and automation efforts encountered in the mining industry, particularly in the Pilbara iron ore region of Western Australia. The goal is to paint a picture of the technology landscape and highlight issues relevant to an engineering audience, to raise awareness of artificial intelligence (AI) and automation trends in mining. It assumes the reader has no prior knowledge of mining and builds context gradually through focused discussion and short summaries of common open-pit mining operations. The principal activities can be categorised in terms of resource development and mine, rail, and port operations (Figure 1). From mineral exploration to ore shipment, there are roughly nine steps in between (Figure 2): geological assessment, mine planning and development, production drilling and assaying, blasting and excavation, transportation of ore and waste, crushing and screening, stockpiling and loading out, rail network distribution, and ore car dumping. The objective is to describe these processes and provide insights into some of the challenges and opportunities from the perspective of a decade-long industry–university R&D partnership.

Paper: https://protect-au.mimecast.com/s/SJ-ICK1DvKTD2ZVxyhMBq-r?domain=arxiv.org

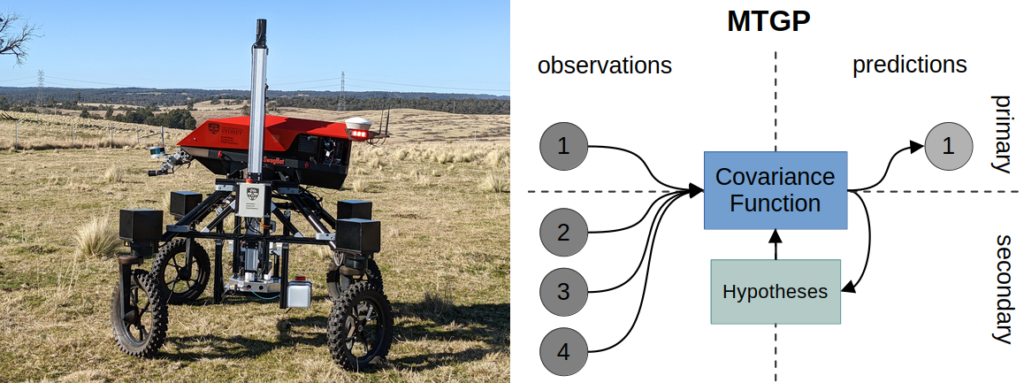

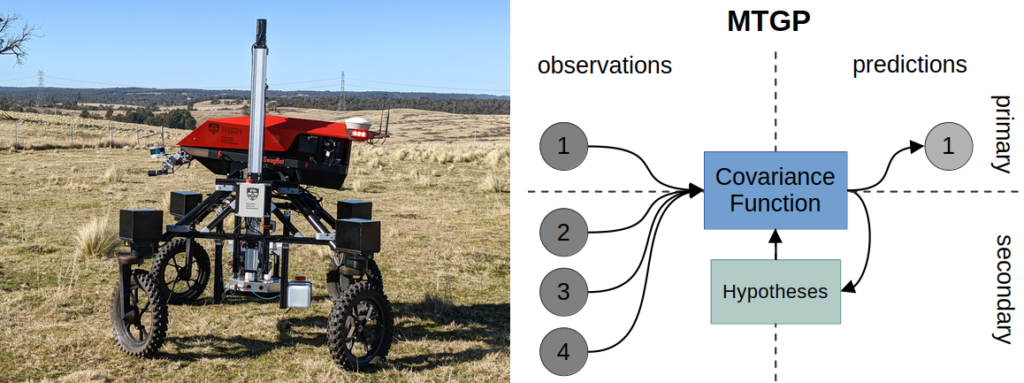

Automated Testing of Spatially-Dependent Environmental Hypotheses through Active Transfer Learning

Nicholas Harrison, Nathan Wallace and Salah Sukkarieh

Efficient sample collection is an important factor in outdoor information-gathering applications because of high sampling costs such as time, energy, and potential damage to the environment. Using available a priori data can be a powerful way to increase efficiency. However, the relationships between these data and the quantity of interest are often not known ahead of time, which limits the ability to leverage this knowledge for more efficient planning. To this end, this work combines transfer learning and active learning through a Multi-Task Gaussian Process and an information-based objective function. This combination allows the method to explore the space of hypothetical inter-quantity relationships and evaluate these hypotheses in real time, so that new knowledge can be exploited immediately in future plans. The proposed method is evaluated on synthetic data and is shown to evaluate multiple hypotheses correctly. Its effectiveness is also demonstrated on real datasets. The technique identifies and leverages hypotheses that show a medium or strong correlation to reduce prediction error by a factor of 1.4–3.4 within the first 7 samples, while poor hypotheses are quickly identified and rejected, eventually having no adverse effect.

Preprint: https://arxiv.org/abs/2402.18064
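As a rough illustration of how a multi-task GP lets a priori data inform where to sample next, the sketch below uses an intrinsic coregionalization kernel (our choice; the paper's kernel may differ) with a fixed, assumed task correlation `rho`, and picks the next location where the target task's posterior variance is highest, which for a Gaussian is also where its entropy is highest. In the paper the inter-quantity relationship is the hypothesis being tested and learned; here it is simply assumed, and all function names and data are hypothetical.

```python
import numpy as np

def rbf(a, b, length=0.3):
    """Squared-exponential kernel matrix between 1-D input arrays a and b."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length) ** 2)

def icm_kernel(xa, ta, xb, tb, B, length=0.3):
    """Intrinsic coregionalization kernel: B[task_a, task_b] * k(x_a, x_b)."""
    return B[np.ix_(ta, tb)] * rbf(xa, xb, length)

def posterior_variance(x_obs, t_obs, x_cand, t_cand, B, noise=1e-2):
    """GP posterior variance at candidate (x, task) pairs. It depends only on
    where samples were taken, not on their measured values."""
    K = icm_kernel(x_obs, t_obs, x_obs, t_obs, B) + noise * np.eye(len(x_obs))
    Ks = icm_kernel(x_obs, t_obs, x_cand, t_cand, B)
    prior = np.diag(icm_kernel(x_cand, t_cand, x_cand, t_cand, B))
    return prior - np.sum(Ks * np.linalg.solve(K, Ks), axis=0)

def next_sample(x_obs, t_obs, x_cand, B, target_task=1):
    """Choose the candidate where the target task's posterior variance
    (and hence, for a Gaussian, its entropy) is largest."""
    t_cand = np.full(len(x_cand), target_task)
    var = posterior_variance(x_obs, t_obs, x_cand, t_cand, B)
    return x_cand[np.argmax(var)], var

# Hypothetical setup: task 0 is dense a priori data, task 1 the quantity of interest.
rho = 0.9                                   # assumed (not learned) inter-task correlation
B = np.array([[1.0, rho], [rho, 1.0]])
x_prior = np.linspace(0.0, 1.0, 21)         # a priori data covers the whole transect
x_obs = np.concatenate([x_prior, [0.1]])    # plus one sample of the target quantity
t_obs = np.concatenate([np.zeros(21, dtype=int), np.ones(1, dtype=int)])
x_cand = np.linspace(0.0, 1.0, 101)
x_next, var = next_sample(x_obs, t_obs, x_cand, B)
print(f"next sample at x = {x_next:.2f}, max variance = {var.max():.3f}")
```

With a strong assumed correlation, the dense prior data already lowers the target variance everywhere; setting `rho = 0` (a rejected hypothesis) makes the prior data uninformative, and the planner simply moves as far as possible from the existing sample.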

Workshop – RoboNerF, Friday 17 May

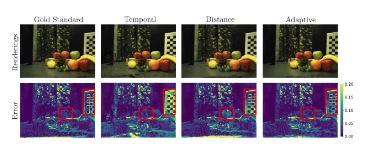

Adaptive Keyframe Selection for Online Iterative NeRF Construction

Joshua Wilkinson, Jack Naylor, Ryan Griffiths and Donald G. Dansereau

We propose a method for intelligently selecting images for building neural radiance fields (NeRFs) from the large number of frames available from typical robot-mounted cameras. Our approach iteratively constructs and queries a NeRF to adaptively select informative frames. We demonstrate that our approach maintains high-quality representations with a 78% reduction in input data and reduced training time in single-pass mapping, while preventing unbounded growth of input frames in persistent mapping. We also show that our adaptive approach outperforms non-adaptive spatial and temporal methods in terms of training time and rendering quality. This work is a step towards persistent robotic NeRF-based mapping.

Preprint: https://roboticimaging.org/Papers/wilkinson2024adaptive.pdf

Workshop – HERMES, Monday 13 May

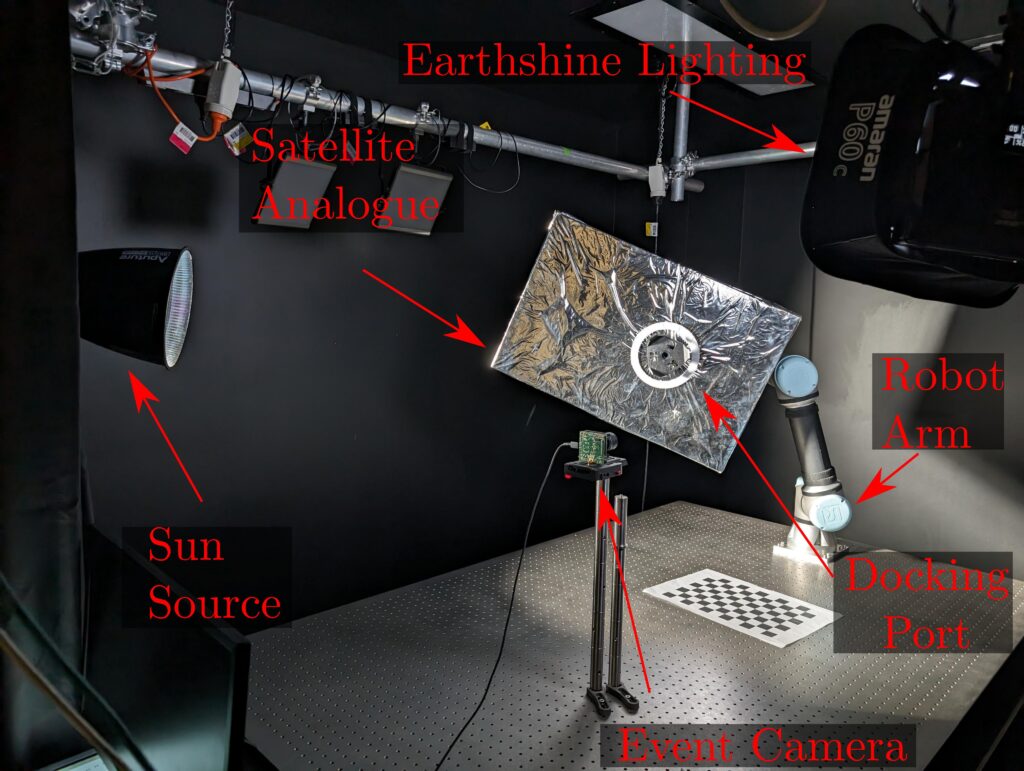

Towards Event-Based Satellite Docking: A Photometrically Accurate Low-Earth Orbit Hardware Simulation

Nuwan Munasinghe, Cedric Le Gentil, Jack Naylor, Mikhail Asavkin, Donald G. Dansereau and Teresa Vidal-Calleja

Automated satellite docking is a prerequisite for most future in-orbit servicing missions. Most proposed vision-based solutions use conventional cameras. However, conventional cameras struggle with the extreme illumination conditions in orbit. Event cameras have been used in various applications because of their advantages over conventional cameras, such as high temporal resolution, higher dynamic range, low power consumption, and higher pixel bandwidth. This paper presents a hardware setup that simulates low Earth orbit (LEO) conditions, with the aim of showing the suitability of event-based cameras for satellite docking applications. The test environment has lighting conditions similar to LEO, a mock-up of a satellite docking port following Lockheed Martin's Mission Augmentation Port standard, and a robotic arm that moves the mock-up satellite to replicate movements in space. This paper shows the drawbacks that traditional cameras face in LEO conditions, such as pixel saturation, which results in feature loss. To overcome these limitations, this paper presents a port detection pipeline using event-based cameras. The proposed pipeline detects the docking port with an average error of 8.58 pixels in image space, which corresponds to 2.48% of the image width and 3.30% of the image height. The proposed method therefore provides promising results towards satellite docking using event cameras in the LEO environment, where illumination conditions are challenging.

Preprint: https://roboticimaging.org/Papers/munasinghe2024towards.pdf
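The two percentages are the mean pixel error divided by the image width and height. Working backwards, they imply an image of about 346 × 260 pixels, which matches common event sensors such as the DAVIS346; the abstract does not name the sensor, so treat that resolution as an assumption.

```python
mean_error_px = 8.58
width_px, height_px = 346, 260  # assumed resolution, back-calculated from the percentages

width_pct = 100 * mean_error_px / width_px
height_pct = 100 * mean_error_px / height_px
print(f"{width_pct:.2f}% of width, {height_pct:.2f}% of height")  # 2.48% of width, 3.30% of height
```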

Workshop – Monday 13 May

Autonomous Systems for Sustainable Agriculture – Livestock Example

Professor Salah Sukkarieh

Link: https://www.santannapisa.it/it/icra-2024-workshop-agri-robotics