When: 5th of February, 2:00pm AEDT

Where: This seminar will be partially presented at the ACFR seminar area, J04 lvl 2 (Rose St Building) and partially online via Zoom. RSVP

Speakers: Jaehyun Lim, Jonghak Bae, Jaewoong Han

Talk 1:

Title: ADMM-based Time-Optimal Nonlinear Model Predictive Control for Autonomous Vehicle Racing

Abstract:

In the past decade, many studies have proposed fast nonlinear model predictive control (NMPC) methods for autonomous racing based on sequential quadratic programming (SQP) and the real-time iteration (RTI) scheme, but they still have limitations in terms of trade-offs among numerical stability, performance, and execution speed. To address these limitations, we define a time-optimal NMPC problem with a separable objective function, which relaxes the time-optimization problem into a relatively easy-to-solve one by separating it from the vehicle dynamics and inequality constraints, and we then propose a solution based on the alternating direction method of multipliers (ADMM). The resulting ADMM-SQP algorithm is embedded into an RTI scheme (ADMM-RTI), which performs a single SQP/ADMM step per control interval. Lap-time trial experiments demonstrate that ADMM-RTI achieves superior lap times and robust feasibility compared to several representative NMPC-based racing formulations, while maintaining median RTI solve times around 3.6-4.0 ms and worst-case times below 12 ms. Additional multi-vehicle simulations confirm that the signed-distance-field-based collision-avoidance formulation can be integrated into the proposed ADMM-RTI controller to enable safe overtaking maneuvers.

Bio:

Jaehyun Lim received the B.S. degree in mechanical engineering, in 2017, from Yonsei University, Seoul, South Korea. He is currently working toward his Ph.D. degree in the field of machine learning and control systems at Yonsei University. His research interests include machine learning, optimal control, robotics, and self-driving vehicles.

Talk 2:

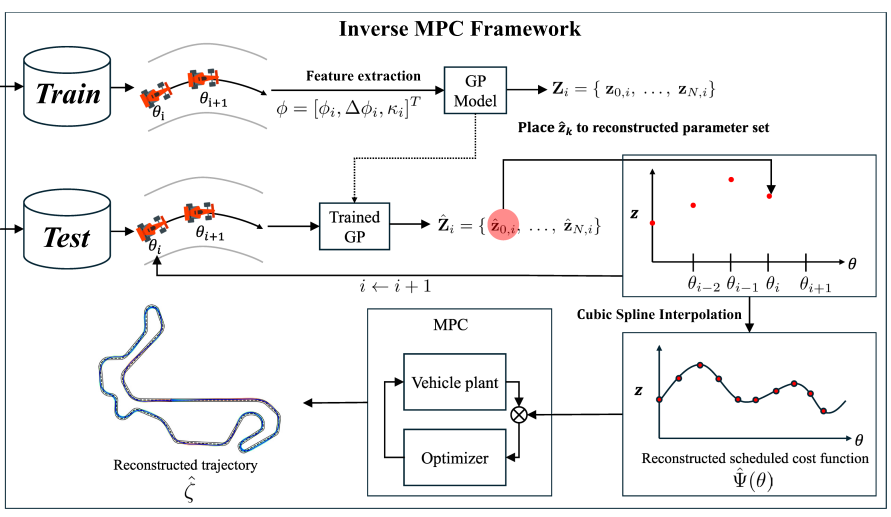

Title: Data-Driven Virtual Driving Instruction for Skill Learning and Expert Imitation in Racing

Abstract: Novice drivers in automobile racing face significant challenges in improving their performance while maintaining safety. Traditional one-on-one coaching systems suffer from several limitations, including subjective assessments, inconsistent teaching styles, and high costs. In this seminar, I present a virtual driving instructor framework for driver assistance systems in automobile racing, explored from multiple methodological perspectives. First, I introduce a learning-based driving tutoring system that classifies driver skill levels from driving data and identifies performance-deficient features from a racing perspective using SHAP (SHapley Additive exPlanations). Based on these identified weaknesses, a large language model (LLM) generates interpretable and actionable feedback to help novice drivers improve specific driving skills. Next, I discuss teaching approaches based on expert imitation through Model Predictive Control (MPC) cost function tuning, where novice drivers can be guided toward expert-like driving behavior even when their trajectories deviate from expert demonstrations. To this end, I briefly introduce two imitation frameworks: one that identifies scheduled MPC parameters using Gaussian Process (GP) models, and another based on differentiable MPC with strategy-based cost functions. Together, these approaches aim to provide a scalable, interpretable, and data-driven virtual coaching system for skill improvement in automobile racing.

Bio: Jonghak Bae received the B.S. degree in robot engineering, in 2021, from Hanyang University, Ansan, South Korea. He is currently working toward the Ph.D. degree in mechanical engineering at Yonsei University, Seoul, South Korea. His research interests include machine learning and optimal control, with a strong focus on autonomous driving.

Talk 3:

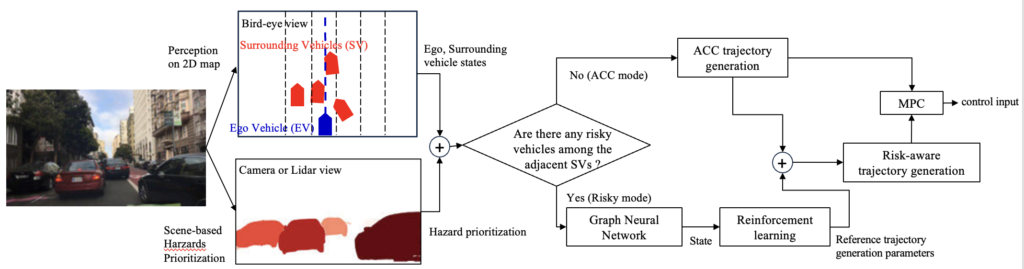

Title: RL-Enhanced Control for Risk-Aware Safety and Personalized Ride Comfort

Abstract: Modern intelligent vehicles struggle to balance dynamic safety with personalized ride quality. Traditional rule-based systems often fail to navigate complex hazards or adapt to diverse human preferences. First, I propose a Risk-Aware Adaptive Cruise Control (ACC). This system uses Graph Neural Networks (GNN) to encode surrounding vehicle states, allowing an RL agent to predict safety parameters for a Hazard-Prioritized MPC. This integration enables seamless transitions between reference tracking and real-time risk mitigation. Next, I present a Personalized Ride Comfort Controller for EVs using torque-based pitch control. By employing Reinforcement Learning from Human Feedback (RLHF), the system learns reward functions from binary feedback to schedule control gains. This optimizes torque distribution to minimize discomfort according to individual preferences. These approaches demonstrate how data-driven learning augments classical control to achieve robust safety and human-centric mobility.

Bio: Jaewoong Han received the B.S. degree in robot engineering, in 2024, from Yonsei University, Seoul, South Korea. He is currently working toward the Ph.D. degree in mechanical engineering at Yonsei University, Seoul, South Korea. His research interests include machine learning, reinforcement learning, and self-driving vehicles.