When: Thursday 6th of April, 1pm AEST

Where: This is a hybrid seminar, presented in person at the Rose Street Seminar area (J04) and online via Zoom.

Speaker: Dr Ruigang Wang

Title: Direct Parameterization of Lipschitz-Bounded Deep Networks

Abstract:

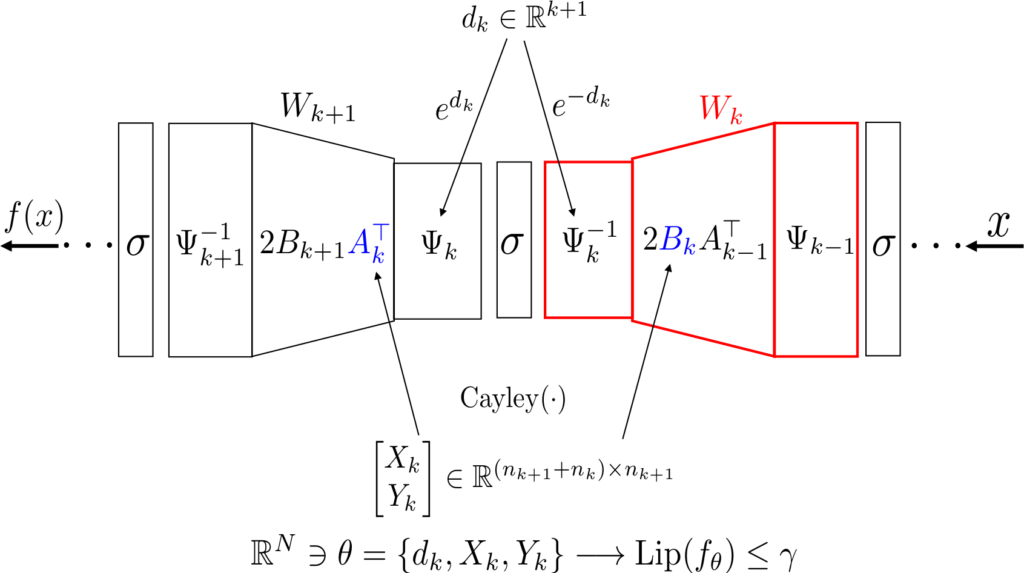

In this talk I will present a new parameterization of deep neural networks (both fully connected and convolutional) with guaranteed Lipschitz bounds, i.e. limited sensitivity to perturbations. The Lipschitz guarantees are equivalent to the tightest known bounds, which are obtained by certification via a semidefinite program (SDP); however, SDP certification does not scale to large models. In contrast to the SDP approach, we provide a "direct" parameterization, i.e. a smooth mapping from a Euclidean space onto the set of weights of Lipschitz-bounded networks. This enables training via standard gradient methods, without any computationally intensive projections or barrier terms. The new parameterization can equivalently be thought of either as a new layer type (the sandwich layer) or as a novel parameterization of standard feedforward networks with parameter sharing between neighbouring layers. We illustrate the method with some applications in image classification.
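To make the idea of a "direct" parameterization concrete, here is a minimal sketch in Python/NumPy. It is not the sandwich layer from the talk. Instead, it swaps in a simpler, well-known construction: the Cayley transform smoothly maps an unconstrained square matrix to an orthogonal one, so each linear layer has spectral norm 1. Combined with 1-Lipschitz ReLU activations, this makes the whole network γ-Lipschitz by construction. The function names `cayley` and `lipschitz_mlp`, the equal layer widths, and the choice to scale only the final layer by `gamma` are illustrative assumptions. Because every real-valued parameter setting yields a valid network, the free parameters could be trained with ordinary gradient descent, with no projection step.

```python
import numpy as np


def cayley(W: np.ndarray) -> np.ndarray:
    """Smoothly map an unconstrained square matrix W to an orthogonal matrix.

    A = W - W^T is skew-symmetric, so I + A is always invertible and
    Q = (I + A)^{-1} (I - A) satisfies Q^T Q = I.
    """
    n = W.shape[0]
    A = W - W.T
    I = np.eye(n)
    return np.linalg.solve(I + A, I - A)


def lipschitz_mlp(params, x, gamma=1.0):
    """Evaluate a gamma-Lipschitz MLP built from unconstrained (W, b) pairs.

    Hypothetical sketch: all layers are square (same width), hidden layers
    use ReLU (1-Lipschitz), and only the final linear map is scaled by
    gamma, so the overall Lipschitz bound (in the 2-norm) is gamma.
    """
    h = x
    for W, b in params[:-1]:
        h = np.maximum(cayley(W) @ h + b, 0.0)  # orthogonal map, then ReLU
    W_out, b_out = params[-1]
    return gamma * (cayley(W_out) @ h) + b_out


# Usage: random free parameters give a valid network, no projection needed.
rng = np.random.default_rng(0)
n, depth, gamma = 4, 3, 2.0
params = [(rng.normal(size=(n, n)), rng.normal(size=n)) for _ in range(depth)]

x, y = rng.normal(size=n), rng.normal(size=n)
fx, fy = lipschitz_mlp(params, x, gamma), lipschitz_mlp(params, y, gamma)
ratio = np.linalg.norm(fx - fy) / np.linalg.norm(x - y)
print(f"observed ratio {ratio:.3f} <= bound {gamma}")
```

The bound holds for any choice of `params`, which is the point of a direct parameterization: the constraint is built into the map from free parameters to weights rather than enforced afterwards.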

Bio:

Ruigang Wang received his Ph.D. degree from UNSW in 2017. From 2017 to 2018 he worked as a postdoc at UNSW, focusing on distributed model predictive control for large-scale systems. Since 2018, he has been a postdoc at the ACFR. His research interests include robust machine learning and nonlinear control theory.