When: Thursday 1st of April, 1pm AEDT

Where: This seminar will be presented online; RSVP required.

Speakers: Ahalya Ravendran, Dr Tejaswi Digumarti and Dr Donald Dansereau

Title: Recent Developments in Robotic Imaging

Abstract:

Three speakers will present their recent work on helping robots see and interpret the world:

Ahalya Ravendran: Burst imaging for light-constrained structure from motion

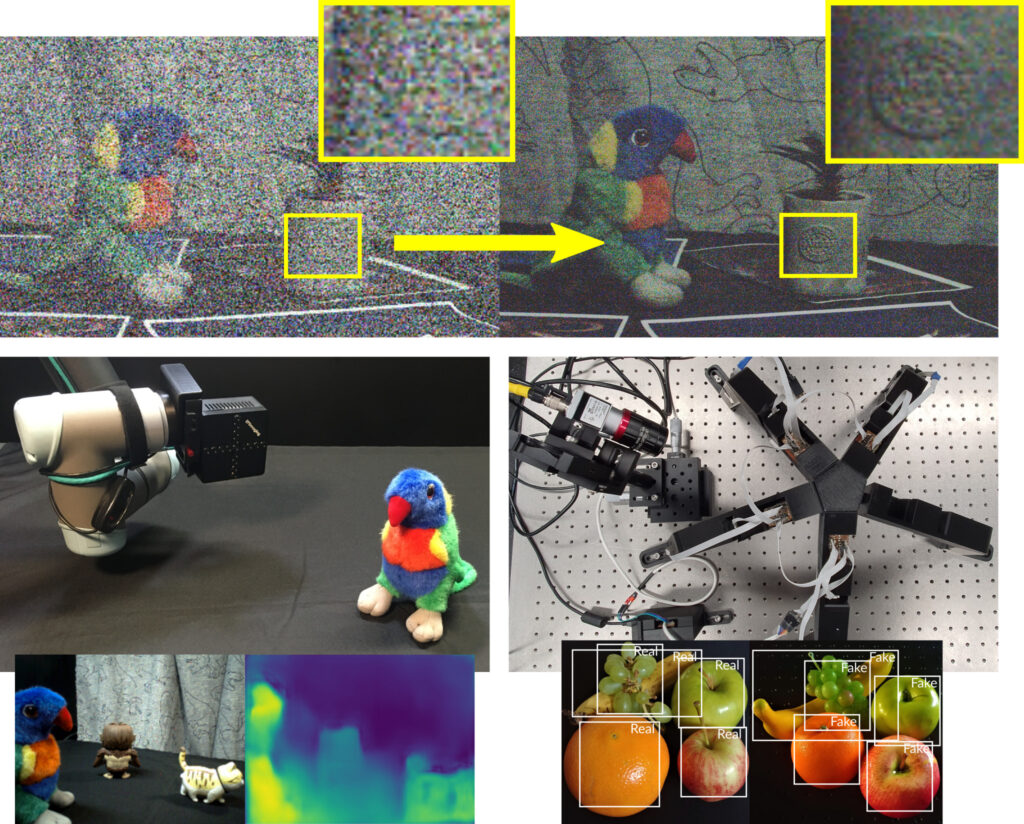

State-of-the-art reconstruction methods perform well under good lighting conditions but fail in low light due to low signal-to-noise ratio. In this talk, we establish the viability of adapting burst imaging to improve robotic vision. We demonstrate light-constrained structure from motion with fewer spurious features, more accurate camera pose estimation, and the ability to operate in previously prohibitive conditions.

Tejaswi Digumarti: Estimation of depth and visual odometry from sparse light field images

Light field (LF) cameras are part of a diverse set of new imaging devices that have the potential to dramatically improve robotic perception. However, their use in robotics applications has been limited by the challenges of interpreting and calibrating these cameras. This talk presents a step towards integrating sparse LF cameras into two robotics applications: depth estimation and odometry estimation. We will present a generalized encoding of the LF that captures both geometry and appearance, allowing deep neural networks to be trained through unsupervised learning to predict depth and odometry simultaneously.

Donald Dansereau: Multiplexed illumination for classifying visually similar objects

Distinguishing visually similar objects, such as forged and authentic bills, healthy and unhealthy plants, or real and synthetic fruit, is beyond even the most sophisticated modern classifiers. We propose the use of multiplexed illumination to extend the range of objects that can be successfully discriminated. This work is a step towards generalising optimal multiplexing for tasks in robotic perception.

Bios:

Tejaswi Digumarti is a research associate at the Sydney Institute for Robotics and Intelligent Systems (SIRIS), University of Sydney. His research interests are 3D reconstruction, semantic segmentation and SLAM, with a focus on natural environments with vegetation. He obtained his PhD in 2020, working at the Autonomous Systems Lab, ETH Zürich, and at Disney Research, Zürich. Prior to that, he obtained his Master’s degree from ETH Zürich in 2014 and his Bachelor’s degree from IIT Jodhpur in 2012. When not programming robots, you can find him playing video games or wandering in the woods admiring creepy crawlies.

Ahalya Ravendran holds a Bachelor’s degree in Mechatronic Engineering from the Sri Lanka Institute of Information Technology and a Master’s degree in Engineering Technology from Thammasat University, Thailand. She is currently a PhD student at the Australian Centre for Field Robotics. Her research focuses on burst imaging for robotic vision in visually challenging conditions.

Donald Dansereau leads the Robotic Imaging group at SIRIS/ACFR, exploring how new imaging approaches can help robots see and do. In 2004 he completed an MSc at the University of Calgary, receiving the Governor General’s Gold Medal for his work in light field processing. His industry experience includes physics engines for video games, computer vision for microchip packaging, and chip design for automated electronics testing. He completed a PhD on underwater robotic vision at the ACFR in 2014, and held postdoctoral appointments at QUT and Stanford before taking on his present role.