When: Thursday 17th of November, 1pm AEDT

Where: This seminar will be presented partly in person at the Rose Street Seminar area (J04) and partly online via Zoom. RSVP here.

Speakers: Donald Dansereau, Adam Taras, Ahalya Ravendran, Ryan Griffiths

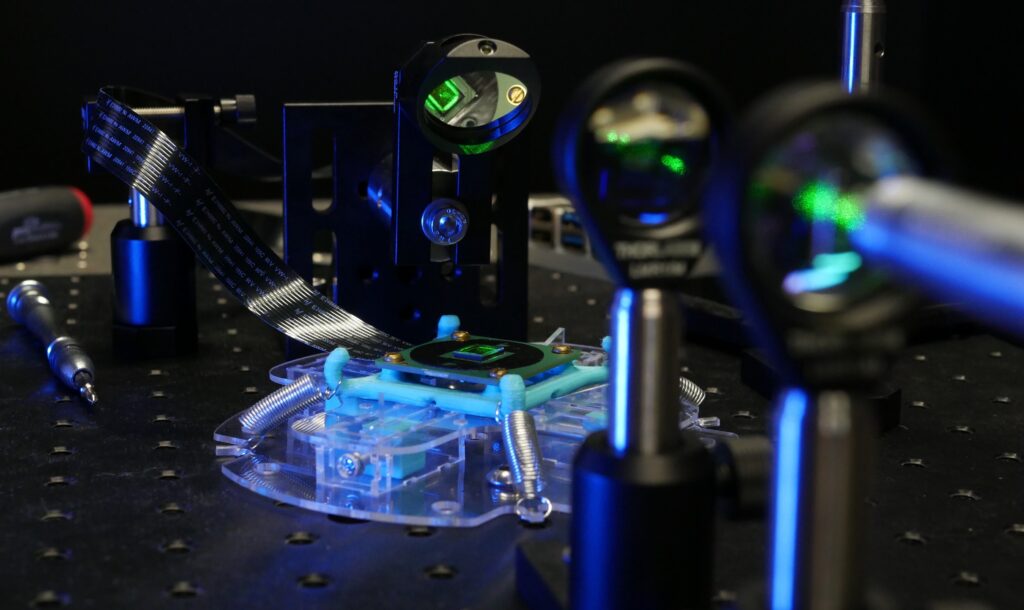

Title: Recent Developments in Robotic Imaging

Abstract:

After a brief introduction to the robotic imaging group, three speakers will present their recent work.

Adam Taras – Hyperbolic View Dependency for All-in-Focus Time of Flight Fields. Time of flight (ToF) cameras measure depth and intensity images, enabling improved scene representation and high-level decision making in robotic systems. However, they perform poorly at long ranges and around absorptive or specular objects. ToF fields measured by arrays of cameras have been proposed for mitigating these limitations. In this work we expose a previously undescribed hyperbolic view dependency in ToF fields, and exploit this to construct an all-in-focus filter that improves noise immunity and accuracy of depth estimation. These improvements increase the range of conditions in which ToF measurements can be relied upon by robotic systems.

Ahalya Ravendran – BuFF: Burst Feature Finder for Light-Constrained 3D Reconstruction. Robots operating at night using conventional cameras face significant challenges in reconstruction due to noise-limited images. In this work, we develop a novel feature detector that operates directly on image bursts to enhance vision-based reconstruction under extremely low-light conditions. We demonstrate improved feature performance, camera pose estimates and structure-from-motion performance using our feature finder in challenging light-constrained scenes.

Ryan Griffiths – NOCaL: Calibration-Free Semi-Supervised Learning of Odometry and Camera Intrinsics. The need for bespoke models, calibration and low-level processing represents a key barrier to the deployment of cameras in robotics. In this work we present NOCaL, Neural Odometry and Calibration using Light fields, a semi-supervised learning architecture capable of interpreting previously unseen cameras without calibration for the tasks of odometry and view synthesis.

Bios:

Adam Taras is a final-year B Engineering (Mechatronics with Space Major) / B Science (Physics) student at The University of Sydney. Since 2019 he has worked with the robotic imaging group on projects including 3D hyperspectral imaging, Doppler time of flight cameras, and privacy-enhanced robotic vision. Adam’s future research interests include computational imaging and novel cameras for robotics applications.

Ahalya Ravendran is a PhD student in the Robotic Imaging group at ACFR. She received her BSc honours degree in mechatronics engineering from Sri Lanka Institute of Information Technology and her MSc research degree in robotics from Thammasat University, Thailand. Her research is focused on finding novel ways to allow robots to operate in low light.

Ryan Griffiths is a second-year PhD student at ACFR, having finished his undergraduate studies in mechatronics engineering at the University of Sydney. His research interests are in using machine learning to allow robotic platforms to better understand and interpret the data they receive from their sensors.

Since 2018 Don Dansereau has led the Robotic Imaging group at the ACFR, exploring how new imaging approaches can help robots see and do. In 2004 he completed an MSc at the University of Calgary, receiving the Governor General’s Gold Medal for his work in light field processing. His industry experience includes physics engines for video games, computer vision for microchip packaging, and chip design for automated electronics testing. He completed a PhD on underwater robotic vision at the ACFR in 2014, and held postdoctoral appointments at QUT and Stanford before taking on his present role.