When: Thursday 29th of May, 1:00pm AEST

Where: This seminar will be presented in person at the ACFR seminar area, J04 level 2 (Rose St Building), and online via Zoom.

Speaker: Jay Zhang

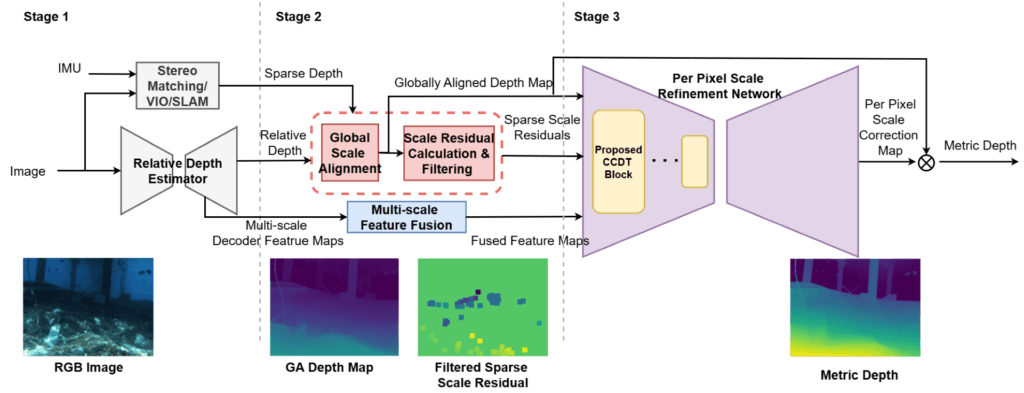

Title: Underwater Depth Estimation Pipeline with Sparse Depth Measurements

Abstract:

Underwater infrastructure requires regular inspection and maintenance due to the harsh and degrading marine environment. Current inspection methods, which rely on human divers or remotely operated vehicles, face significant limitations from perceptual constraints and operational risks, especially around complex structures or in turbid conditions. Enhancing spatial awareness through accurate depth estimation can mitigate these risks and enable better autonomy in underwater inspection and intervention tasks. This seminar presents an underwater monocular depth estimation pipeline that integrates monocular relative depth estimation with sparse depth measurements, producing real-time dense depth estimates with metric scale. The proposed pipeline leverages sparse metric depth points to scale relative depth predictions and employs a refinement network based on the proposed Cascade Conv-Deformable Transformer block, specifically designed to enhance performance. Preliminary evaluations using various underwater datasets, including forward-looking and downward-looking imagery, demonstrate improvements in accuracy and generalization over baseline methods. The goal is a robust, generalised solution capable of efficient real-time execution on embedded systems, significantly aiding underwater autonomous inspection and intervention tasks.
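
As a rough illustration of the scaling step mentioned in the abstract, the sketch below fits a single global scale and shift that map a relative depth map onto a few sparse metric depth points by least squares. This is only an assumption about how such alignment might be done, not the method presented in the seminar: the function name `align_relative_depth`, the affine model, and the synthetic data are all hypothetical, and the refinement network is not covered.

```python
import numpy as np

def align_relative_depth(rel_depth, sparse_depth, valid_mask):
    """Fit s, t so that s * rel_depth + t matches the sparse metric depths.

    Hypothetical helper: assumes the relative prediction differs from
    metric depth by one global scale and shift (an illustrative model).
    """
    r = rel_depth[valid_mask].astype(np.float64)
    d = sparse_depth[valid_mask].astype(np.float64)
    if r.size < 2:
        raise ValueError("need at least two sparse depth points")
    # Solve min_{s,t} || s * r + t - d ||^2 as a linear least-squares problem.
    A = np.stack([r, np.ones_like(r)], axis=1)
    (s, t), *_ = np.linalg.lstsq(A, d, rcond=None)
    return s * rel_depth + t, s, t

# Synthetic check: the relative map is an affine transform of the "true" depth.
rng = np.random.default_rng(0)
rel = rng.uniform(0.1, 1.0, size=(4, 6))
true_metric = 5.0 * rel + 0.5
mask = np.zeros_like(rel, dtype=bool)
mask[0, 1] = mask[2, 3] = mask[3, 5] = True   # three sparse measurements
sparse = np.where(mask, true_metric, 0.0)

dense, s, t = align_relative_depth(rel, sparse, mask)
```

On this noise-free synthetic input the fit recovers the scale and shift used to generate it. With real sparse measurements, noise and outliers would likely call for a robust fit, and many relative depth models predict inverse depth, in which case the alignment would be done in that space instead.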

Bio:

Jay Zhang is currently a PhD student in the ACFR Marine group, supervised by Stefan Williams, Viorela Ila and Gideon Billings. Jay completed a Bachelor of Engineering and a Bachelor of Commerce at the University of Sydney, where he developed foundational skills and interests in marine robotics. Jay’s research focuses on underwater perception for autonomous intervention operations, emphasising the development of robust depth perception and scene representations through multi-sensor fusion of visual, acoustic, and inertial data. His goal is to enhance the autonomous capabilities of underwater robotic systems, enabling effective navigation, inspection, and interaction within complex underwater environments.